The future of black box optimization benchmarking is procedural

February 16, 2025

Recent work by Saxon et al. highlights the need for dynamic benchmarks, and I think procedural content generators might provide an answer.

Starting a map of high-dimensional Bayesian optimization (of discrete sequences) using small molecules as a guiding example

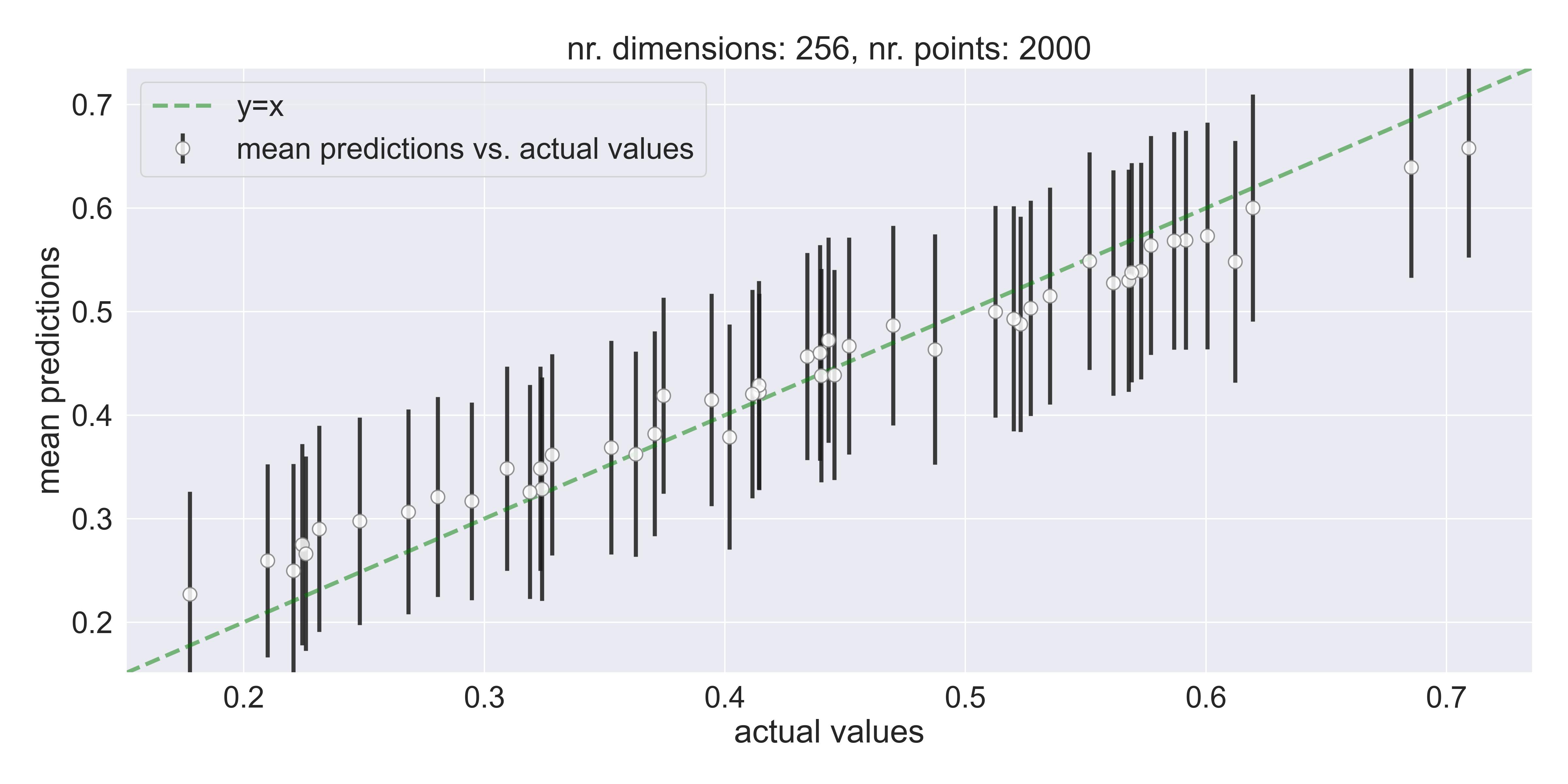

This blogpost implements a small experiment to check when and how Gaussian Processes fail in high dimensions, and explains recent research on the subject.

An introduction to Bayesian optimization using Gaussian Processes.

Representing levels from Super Mario Bros as strings, and learning a continuous representation using Variational Autoencoders.

An essay on stuttering, my experience, and a little bit about the neuroscience behind it.

A Rosetta stone showing how basic concepts in measure theory (e.g. measurable functions) correspond to concepts in probability theory (e.g. random variables).

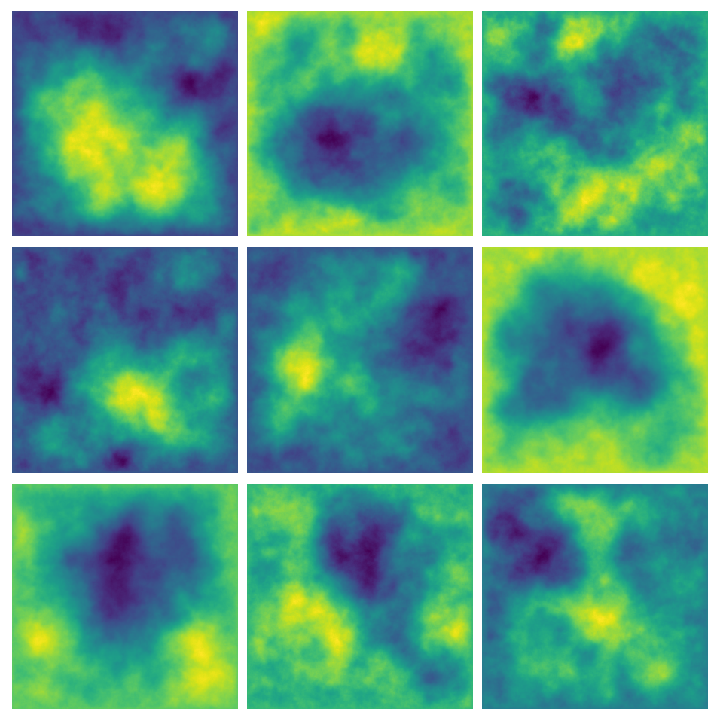

In this blogpost I share a technique to generate random priors from Gaussian noise (by having the noise model the slope/curvature of the prior).
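Below is a minimal sketch of one possible reading of this idea. It is not the post's own code. It assumes that a "prior" means a probability density on the interval [0, 1], and that Gaussian noise is treated as the curvature (second derivative) of the log-density. Integrating that noise twice yields a smooth random curve, which is then exponentiated and normalised. The function name `random_prior` and its parameters are hypothetical.

```python
import numpy as np


def random_prior(n_points: int = 200, seed: int = 0) -> tuple[np.ndarray, np.ndarray]:
    """Hypothetical sketch: a random smooth density on [0, 1] built from Gaussian noise.

    Assumption: the noise models the curvature of the log-density, so
    integrating it twice gives a smooth shape rather than a jagged one.
    """
    rng = np.random.default_rng(seed)
    x = np.linspace(0.0, 1.0, n_points)
    dx = x[1] - x[0]

    curvature = rng.normal(size=n_points)  # Gaussian noise plays the role of the 2nd derivative
    slope = np.cumsum(curvature) * dx      # integrate once: slope of the log-density
    log_density = np.cumsum(slope) * dx    # integrate again: smooth log-density

    # Exponentiate (subtracting the max for numerical stability), then normalise
    # so the density integrates to 1 under a simple Riemann sum on the grid.
    density = np.exp(log_density - log_density.max())
    density /= density.sum() * dx
    return x, density


x, p = random_prior(seed=42)
print(p.sum() * (x[1] - x[0]))  # approximately 1.0
```

Modelling the curvature rather than the values directly is what makes the result smooth. Each integration averages out the noise, so a different seed gives a different but still well-behaved shape.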

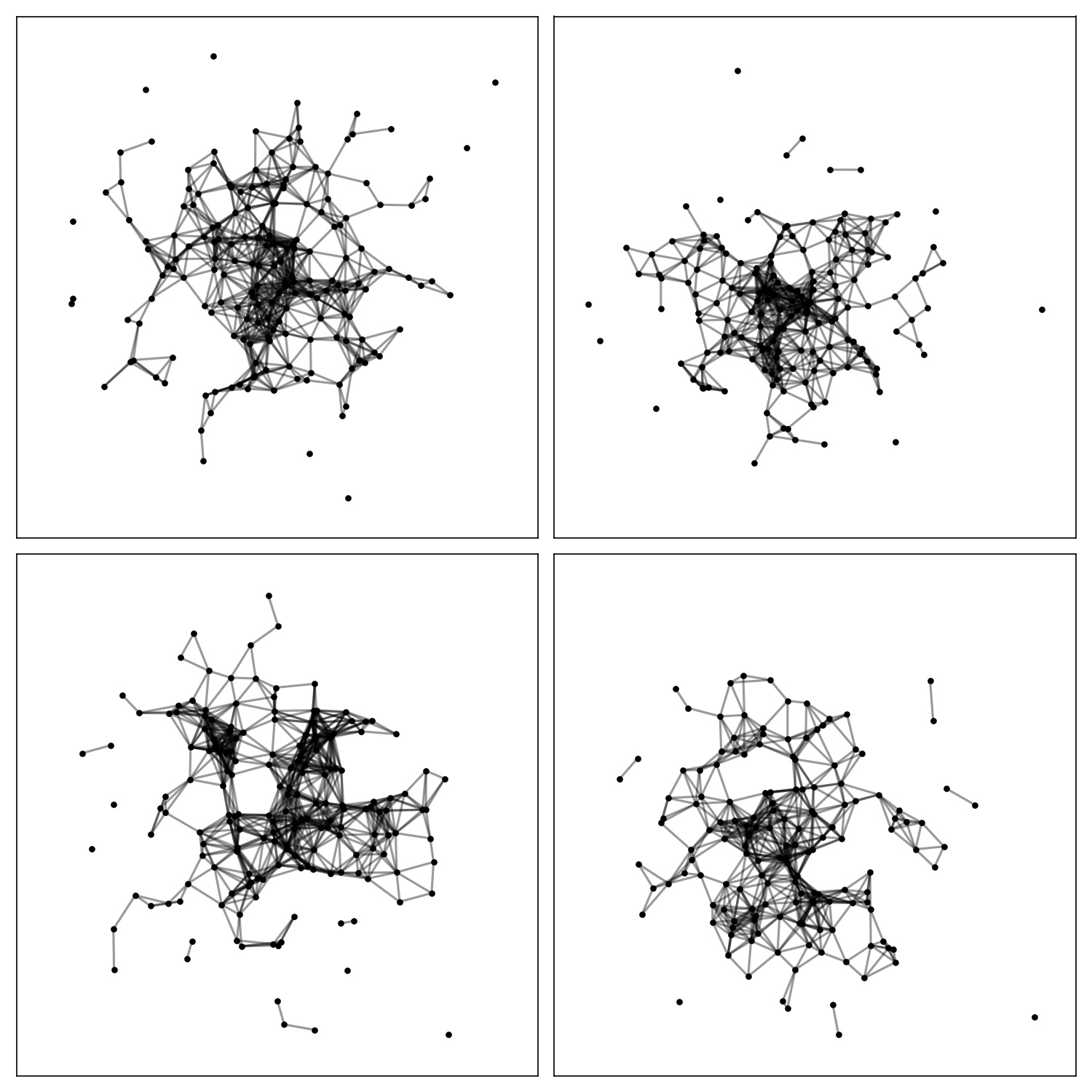

Generating random graphs using strings to seed the number generators.
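As an illustration only, here is a minimal sketch of seeding a random number generator from a string so that the same text always produces the same graph. It assumes an Erdős–Rényi G(n, p) model, where each pair of nodes is connected independently with probability p. The post may use a different graph model. The helper names `seed_from_string` and `random_graph` are hypothetical.

```python
import hashlib
import random


def seed_from_string(text: str) -> int:
    # Python's built-in hash() is randomised per process for str, so use a
    # cryptographic hash to get a seed that is stable across runs and machines.
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    return int(digest, 16)


def random_graph(text: str, n_nodes: int = 8, edge_prob: float = 0.3) -> list[tuple[int, int]]:
    # Hypothetical sketch: Erdős–Rényi G(n, p) driven by a string-seeded RNG.
    rng = random.Random(seed_from_string(text))
    edges = []
    for i in range(n_nodes):
        for j in range(i + 1, n_nodes):
            if rng.random() < edge_prob:  # include edge (i, j) with probability p
                edges.append((i, j))
    return edges


print(random_graph("hello world"))
```

Because the generator is local (`random.Random`) rather than the global `random` module, seeding one graph never affects another. Any string, such as a word or a sentence, becomes a reproducible "name" for a graph.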

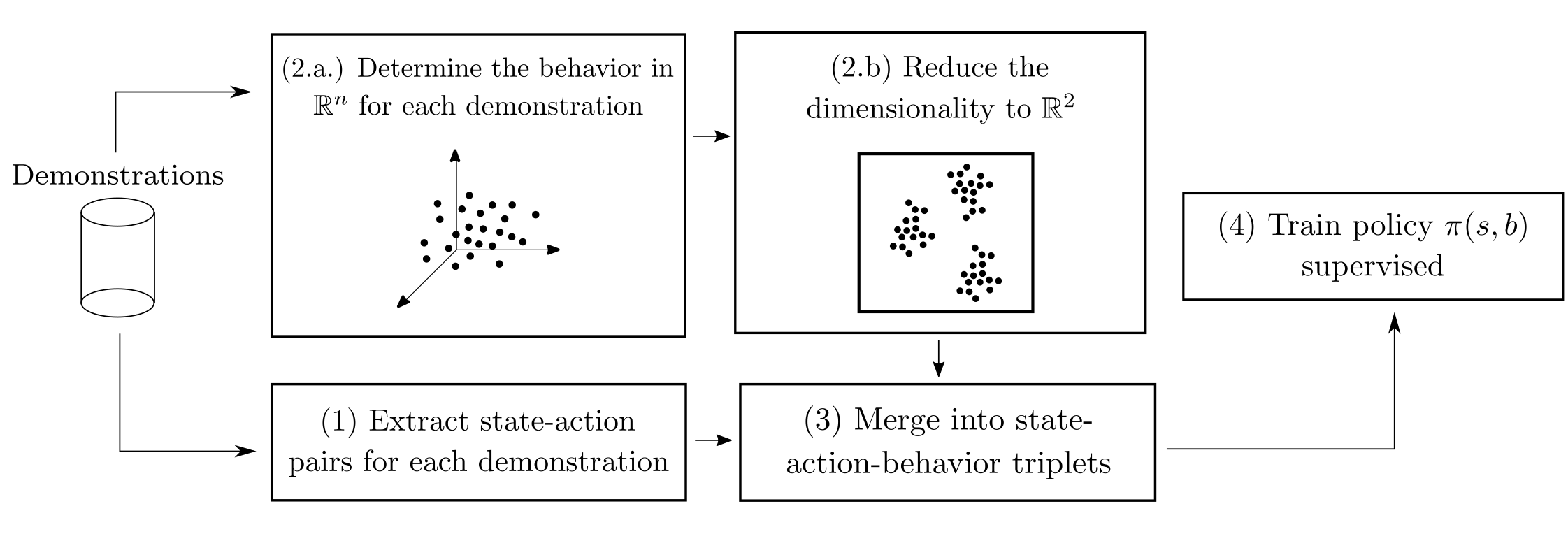

I recently defended my MSc thesis on applications of imitation learning to StarCraft 2. Here is a link to it.